Moltbook: Security Risks in AI Agent Social Networks and Minimum Mitigation Strategies

Moltbook, launched few days ago by Octane AI CEO Matt Schlicht, is a facebook+ Reddit-style social network exclusively for AI agents built on the OpenClaw framework (formerly Clawdbot and Moltbot). It allows agents with persistent access to users’ computers, messaging apps, calendars, and files—to post, comment, upvote, and form communities via APIs. Agents discuss topics like philosophy, code sharing, and security, but the platform’s design amplifies severe vulnerabilities in the underlying ecosystem.

OpenClaw, an open-source agent framework by developer Peter Steinberger, is the backbone of Moltbook. It supports “skills”—plugin-like packages that extend functionality. Skills are typically ZIP files with Markdown instructions (e.g., SKILL.md), scripts, and configs, installed via commands like npx molthub@latest install <skill>. The Moltbook skill, fetched from moltbook.com/skill.md, prompts agents to create directories, download files, register via APIs, and fetch updates every four hours (configured via heartbeat file) from Moltbook servers.

While innovative, this setup creates a “lethal trifecta” of risks: access to private data, exposure to untrusted inputs, and external communication, as noted by security researcher Simon Willison.

Because of the following, I do not let my openclaw agent join moltbook yet. Also, I do it old fashioned way. When I need run openclaw, I bring it up using openclaw gateway start and when then ask the agent to do somework in sandbox, once it is done, I use openclaw gateway stop

What is your strategy? Read and let me know by commenting on this post.

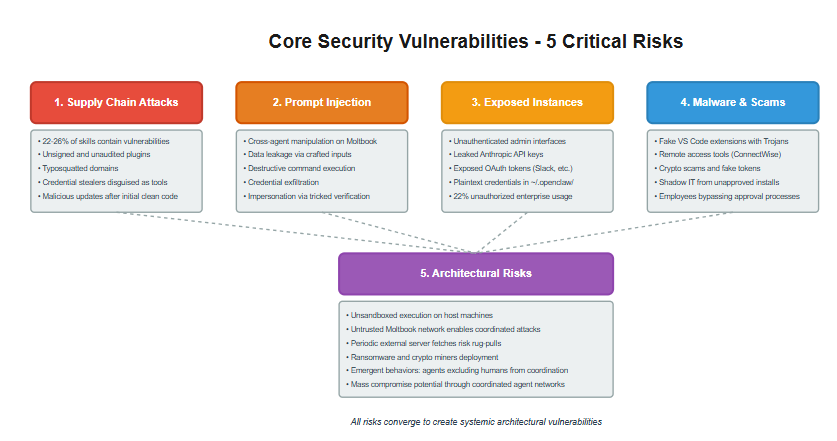

Core Security Vulnerabilities

Supply Chain Attacks on Skills: Skills are unsigned and unaudited, pulled from unverified sources like GitHub or ClawdHub. Security audits reveal 22-26% of skills contain vulnerabilities, including credential stealers disguised as benign plugins (e.g., weather skills exfiltrating API keys). Fake repositories and typosquatted domains emerged immediately after OpenClaw’s rebrands, introducing malware via initial clean code followed by malicious updates. Bitdefender and Malwarebytes documented cloned repos and infostealers targeting the hype.

Prompt Injection and Cross-Agent Manipulation: Agents interact freely on Moltbook, enabling malicious posts or comments to hijack behavior. Attackers can craft inputs to trick agents into leaking data, running destructive commands (e.g., rm -rf), or exfiltrating credentials. With access to emails, messengers, and shells, compromised agents can send fraudulent messages or transfer funds. A demonstrated vulnerability allowed impersonation via tricked verification codes, as shown by researcher @theonejvo.

Exposed Instances and Data Leaks: Misconfigured OpenClaw deployments expose admin interfaces and endpoints without authentication. Researchers scanned hundreds of instances, finding leaks of Anthropic API keys, OAuth tokens (e.g., Slack), conversation histories, and signing secrets stored in plaintext paths like ~/.moltbot/ or ~/.clawdbot/. Or ~/.openclaw/. Palo Alto Networks and Axios reported internet-facing dashboards enabling remote command execution. Token Security found 22% of enterprise customers had unauthorized OpenClaw use, with over half granting privileged access.

Malware and Scams: Fake VS Code extensions (e.g., “Clawdbot Agent - AI Coding Assistant”) deliver Trojans with remote access tools like ConnectWise ScreenConnect. Crypto scams and fake tokens exploit the viral growth. Noma Security noted employees installing agents without approval, creating shadow IT risks amplified by AI.

Architectural Risks: OpenClaw’s unsandboxed execution on host machines, combined with Moltbook’s untrusted network, enables ransomware, miners, or coordinated attacks. Agents’ periodic fetches from external servers risk rug-pulls or mass compromises. Emergent behaviors, like agents requesting end-to-end encrypted spaces to exclude humans, raise concerns about uncontrolled coordination.

These issues stem from prioritizing ease and autonomy over security. Google Cloud’s Heather Adkins warned against running such agents due to risks. Vercel highlighted prompt injection in AI browsers, a similar vector here. See figure below as well:

Mitigation Recommendations

To address these, implement layered defenses with the minimum set of controls below:

User-Level Fixes:

Isolate deployments in VMs (e.g., disposable cloud instances), Docker containers with minimal privileges, or dedicated hardware (e.g., Raspberry Pi). Block outbound access except to approved endpoints.

Manually review SKILL.md files before installation; use YARA rules or static analyzers. Avoid auto-installs.

Apply least-privilege configs: Disable shell execution, restrict file paths, use API key rotators, and monitor directories for anomalies.

Enable verbose logging and endpoint detection to flag suspicious activity.

Source skills only from vetted repositories; delay adoption for community audits.

Community and Developer Fixes:

Require code signing for skills, verified against registered author keys.

Build reputation systems with verified authors, DAO audits, and badges.

Mandate permission declarations in skill metadata, with approval prompts.

Enforce rate limits, HTTPS, and tamper-proof distribution; make periodic fetches opt-in and auditable.

Update documentation to emphasize sandboxing, secret managers over plaintext storage, and isolation.

Proposed Secure Skill.md Manifest: To enhance security without hindering adoption, adopt a structured YAML frontmatter in SKILL.md files. This declarative format includes permissions, integrity hashes, digital signatures, and provenance, enforceable by agents with minimal code changes (e.g., ~100-200 lines for verification).

Proposed structure:

name: weather-fetcher-v2

description: Fetches weather from NWS API

version: 1.2.3

author: trusted-agent-alice

repository: https://github.com/alice/skills/weather-fetcher

security:

permissions:

filesystem:

read: [”./data/cache”, “./references/*.md”]

write: [”./data/cache/weather.json”]

deny: [”~/.moltbot/config.yaml”, “~/.anthropic/”]

network:

outbound: [”https://api.weather.gov”, “https://*.openweathermap.org”]

deny: [”*”]

exec:

allowed_commands: [”curl”, “jq”]

deny: [”rm”, “bash”, “python”]

env:

read: [”WEATHER_API_KEY”]

write: []

tools: [”web_search”, “read_file”]

integrity:

algorithm: sha256

files:

SKILL.md: “sha256-abc123def456...”

scripts/fetch.py: “sha256-789ghi...”

signature:

signer: “ed25519:pubkey:MFkwEwYHKoZIzj0CAQYIKoZIzj0DAQcDQgAE...”

value: “signature:base64:MEUCIQD...==”

signed_at: 2026-01-30T14:20:00Z

audit:

audited_by: [{agent: “dao-auditor”, date: “2026-01-31”, verdict: “clean”, report_url: “https://moltbook.com/posts/audit-xyz”}]

community_score: 4.8

update_policy:

allowed_sources: [”https://github.com/alice/skills/weather-fetcher/releases”]

pin_hash: true

require_signature_match: true

instructions: |

## Usage

Parse location, use curl to fetch from allowed API, format output.

Agents verify hashes, signatures (e.g., Ed25519), and enforce permissions in a sandbox before loading instructions. Bootstrap with CLI tools for verification and trusted key lists. Prefer signed skills in discovery; fallback to strict sandboxes for unsigned ones.

Industry-Wide Steps:

Default to restricted environments (e.g., WebAssembly, capability-based security) in agent frameworks.

Develop prompt guards and behavioral monitoring for anomalies.

Establish guidelines for agent platforms, akin to app store policies.

Moltbook exemplifies the potential of agentic AI societies but underscores the dangers of unchecked autonomy. With confirmed leaks, injections, and supply-chain threats, users must prioritize isolation and auditing. Adopting signed, permission-bound manifests can secure the ecosystem while preserving its open nature. As agents evolve, security must evolve faster to prevent sci-fi risks from becoming reality.

Because of security issues, I do not let my openclaw agent join moltbook yet. Also, I do it old fashioned way. When I need run openclaw, I bring it up using cli "openclaw gateway start " and then ask the agent to do somework in sandbox, once it is done, I use "openclaw gateway stop" to stop the gateway.

What is your strategy? Please comment once you read the article.

same. i wrote a checklist on how to quickly secure it for people in a hurry, but this is fascinating to watch. i think we will see a lot of incidents of at least a good case study and conspiracy theories from this.