The “Rule of Two” vs. Reality: Why Meta’s Agent Safeguards Don’t Cover All Core Agentic AI Risks

Recently (October 31, 2025), Meta AI published an article introducing the “Agents Rule of Two,” a pragmatic framework for addressing prompt injection vulnerabilities. It aims to reduce the highest-impact consequences by limiting an agent’s simultaneous access to untrustworthy inputs, sensitive data, and external communication or state change.

While a commendable and necessary step, the “Agents Rule of Two” – which states an agent should satisfy no more than two of [A] processing untrustworthy inputs, [B] accessing sensitive systems/private data, and [C] changing state/communicating externally within a session – focuses predominantly on mitigating prompt injection attacks on a single agent. However, a deeper dive into the OWASP AIVSS defined core agentic AI risks reveals a far more complex and interconnected threat landscape that demands a more holistic approach.

Let’s unpack why the “Rule of Two,” while valuable, leaves critical gaps in securing the agentic future, as illuminated by the OWASP AIVSS defined core agentic AI risks.

The “Rule of Two”: A Good Start, But Not the Finish Line

The “Agents Rule of Two” is ingenious in its simplicity: by preventing an agent from possessing all three “lethal trifecta” properties simultaneously, it aims to break the kill chain for many prompt injection attacks. For instance, an Email-Bot that can process untrusted emails [A] and access your inbox [B] won’t be able to send replies externally [C] without human approval, thus preventing data exfiltration.

As another example, consider a “Travel Assistant Agent” designed to help you plan trips. This agent might be configured as an [AB] agent: it can process untrustworthy inputs like public web search results for flight prices and hotel availability [A], and it can access your sensitive preferences and payment information to make bookings [B]. However, to adhere to the Rule of Two, it would not be allowed to change state or communicate externally [C] without explicit human approval. This means if you ask it to “Book the cheapest flight to Paris,” it might find the flight and prepare the booking details using your payment info, but it would then prompt you for a final “Are you sure you want to book?” before actually making the reservation. This prevents a prompt injection in a web search result from directly causing an unauthorized purchase.
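The Travel Assistant example can be sketched as a small check. This is a minimal illustration of the rule as described above, not Meta’s implementation; the names `AgentSession`, `requires_human_approval`, and `book_flight` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentSession:
    """Hypothetical per-session capability flags for one agent."""
    untrusted_input: bool   # [A] processes untrustworthy inputs
    sensitive_access: bool  # [B] accesses sensitive systems or private data
    external_action: bool   # [C] changes state or communicates externally


def requires_human_approval(session: AgentSession) -> bool:
    """True when the session would hold all three properties at once."""
    return (
        session.untrusted_input
        and session.sensitive_access
        and session.external_action
    )


def book_flight(session: AgentSession, approved_by_human: bool = False) -> str:
    """Stand-in for a state-changing tool call guarded by the rule."""
    if requires_human_approval(session) and not approved_by_human:
        return "pending: awaiting human confirmation"
    return "booked"
```

In this sketch the booking call only goes through once a human has confirmed it, which mirrors the “Are you sure you want to book?” prompt in the example.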

This is excellent for containing individual agent compromises originating from prompt injection. But what happens when the attack vector isn’t a single, isolated prompt, or when the systemic interactions between agents create new vulnerabilities? This is where the OWASP AIVSS defined core agentic AI risks provide a crucial, expanded perspective.

Critical OWASP AIVSS Defined Core Agentic AI Risks Not Fully Covered by the “Rule of Two”

The “Rule of Two” primarily tackles a single agent’s direct capabilities (A, B, C) in a given session. It doesn’t inherently address the complexities arising from multi-agent interactions, persistent state, identity management, or the specific dangers of interacting with critical infrastructure.

Here are key OWASP AIVSS defined core agentic AI risks that require additional layers of security beyond the “Rule of Two”:

OWASP AIVSS Defined Core Agentic AI Risk #3: Agent Cascading Failures

The Gap: While the “Rule of Two” might prevent an initial prompt injection from having maximum impact on a single agent, it doesn’t intrinsically stop a security compromise in one agent from creating ripple effects across an entire interconnected ecosystem. Imagine a scenario where a subtly compromised agent, limited by the “Rule of Two,” still manages to introduce flawed data into a shared knowledge base. This flawed data could then be consumed by other agents, each individually compliant with the “Rule of Two,” leading to collectively damaging outcomes. The propagation of hallucinations across agents, or “SaaS-to-SaaS Pivoting” where one agent’s compromise abuses pre-authorized integrations to impact downstream SaaS applications, are systemic risks far beyond the scope of a single agent’s A/B/C properties.

OWASP AIVSS Defined Core Agentic AI Risk #4: Agent Orchestration and Multi-Agent Exploitation

The Gap: This OWASP AIVSS defined core agentic AI risk directly targets vulnerabilities in how multiple AI agents interact and coordinate. The “Rule of Two” focuses on individual agent constraints. It doesn’t secure “Inter-Agent Communication Exploitation,” “Shared Knowledge Poisoning,” “Trust Relationship Abuse” between cooperating agents, or “Coordination Protocol Manipulation.” An attacker could exploit the orchestration layer itself, manipulating how agents are combined or what shared resources they access, effectively bypassing the spirit of the “Rule of Two” even if individual agents adhere to it. For example, manipulating a multi-agent system’s shared memory could lead to widespread misinterpretations without any single agent processing “untrustworthy input” in the traditional sense.

OWASP AIVSS Defined Core Agentic AI Risk #5: Agent Identity Impersonation

The Gap: This OWASP AIVSS defined core agentic AI risk deals with agents impersonating other agents or humans (e.g., via deepfakes), exploiting weaknesses in identity verification, or leveraging “Shared Identity Pools.” While an impersonating agent might utilize properties [B] and [C] to execute its malicious intent, the “Rule of Two” doesn’t provide a framework for robust agent identity, authentication, or non-repudiation. It doesn’t prevent “Compromised Agent Identity Verification” or the “Unauthorized Cloning of Agents or Humans.” These are foundational trust issues that require a dedicated identity layer, cryptographic attestation, and secure credential management, which are outside the remit of [A], [B], [C] property limitations.

OWASP AIVSS Defined Core Agentic AI Risk #6: Agent Memory and Context Manipulation

The Gap: While a prompt injection (covered by the “Rule of Two”) can be a form of context manipulation, this OWASP AIVSS defined core agentic AI risk goes deeper. It includes “Context Amnesia Exploitation” (where an agent forgets security constraints), “Cross-Session Data Leakage,” “Cross-User Data Leakage,” and “Residual Memory Exploitation.” These persistent vulnerabilities stem from improper memory isolation, management, and lifecycle across sessions or users, rather than solely from a single untrustworthy input within a session. The “Rule of Two” is session-specific and input-focused, missing the long-term, cross-cutting implications of memory flaws.

OWASP AIVSS Defined Core Agentic AI Risk #7: Insecure Agent Critical Systems Interaction

The Gap: The “Rule of Two” advises limiting properties [B] and [C] to mitigate high-impact prompt injections. However, this OWASP AIVSS defined core agentic AI risk highlights the specific, often catastrophic, consequences when agents interact with critical infrastructure (e.g., water treatment plants, power grids, CI/CD pipelines, IoT devices). Risks like “Physical System Manipulation” or “Unintended Automated Critical Decisions or Actions” require not just limiting capabilities but also implementing rigorous intermediary security controls, human-in-the-loop mechanisms, safe default states, and resilient feedback loops designed for physical-world interaction. The “Rule of Two” is a design constraint; it’s not a framework for securing the interface to critical systems.

OWASP AIVSS Defined Core Agentic AI Risk #9: Agent Untraceability

The Gap: This OWASP AIVSS defined core agentic AI risk concerns the inability to accurately determine the sequence of events, identities, and authorizations leading to an agent’s actions – a “forensic black hole.” While a prompt injection (covered by the “Rule of Two”) might initiate an untraceable action, the “Rule of Two” doesn’t provide logging, auditing, or forensic capabilities. Risks like “Log Tampering or Absence,” “Loss of Chain-of-Action (Repudiation Risk),” or “Explainability artifact poisoning” are about accountability and observability, which are separate but equally critical concerns when building secure agentic systems.

OWASP AIVSS Defined Core Agentic AI Risk #10: Agent Goal and Instruction Manipulation

The Gap: While prompt injection is a key attack vector here, this OWASP AIVSS defined core agentic AI risk delves into the nuances of subverting an agent’s purpose, including “Semantic Ambiguity Exploitation,” “Complex Goal Hijacking” through instruction chains, and “Dynamic Goal Steering.” The “Rule of Two” aims to prevent the consequences of prompt injection, but it doesn’t directly address the inherent challenge of translating human intent into secure machine commands or the sophisticated, multi-step manipulation tactics that attackers can employ to gradually shift an agent’s goals, even within the confines of A, B, or C limitations.

Proposing a Better Approach: Zero-Trust Agentic AI

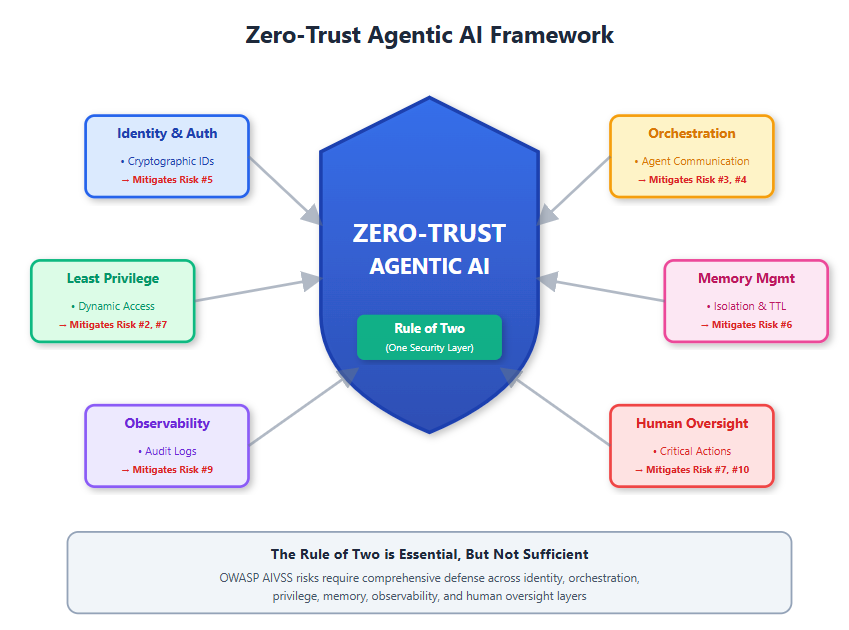

To truly secure the agentic future, we need to move beyond single-vulnerability mitigation and embrace a more comprehensive, architectural approach. Inspired by the principles of Zero-Trust security, I propose a Zero-Trust Agentic AI framework, which inherently assumes no agent, no interaction, and no data is trustworthy by default, regardless of its origin or prior access.

This framework would build upon the “Agents Rule of Two” as a crucial component, but integrate it into a layered defense that addresses the broader OWASP AIVSS defined core agentic AI risks.

Here are the pillars of a Zero-Trust Agentic AI approach:

Strict Identity & Authentication for All Agents (Addressing OWASP AIVSS Defined Core Agentic AI Risk #5):

Every agent, human, and system must have a verifiable, cryptographically attested identity.

Implement strong, mutually authenticated communication between agents (Agent-to-Agent, A2A) and between agents and tools/systems.

No shared service accounts or generic API keys. Each agent (or agent instance) should have its own unique, short-lived credentials.

Action: Adopt standards such as W3C Decentralized Identifiers (DIDs) and Verifiable Credentials (VCs) for agent identities, ensuring cryptographic proof of origin and role.
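To make “unique, short-lived credentials” concrete, here is a minimal sketch that uses an HMAC-signed token from the Python standard library as a stand-in. It is not a DID or VC implementation, and the function names, the claim fields, and the 300-second lifetime are assumptions chosen for illustration.

```python
import base64
import hashlib
import hmac
import json


def issue_credential(agent_id, role, key, now, ttl_s=300):
    """Issue a token bound to one agent that expires ttl_s seconds after now."""
    claims = {"agent_id": agent_id, "role": role, "exp": now + ttl_s}
    payload = json.dumps(claims, sort_keys=True).encode()
    tag = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return base64.urlsafe_b64encode(payload).decode() + "." + tag


def verify_credential(token, key, now):
    """Return the claims if the token is authentic and unexpired, else None."""
    try:
        encoded, tag = token.rsplit(".", 1)
        payload = base64.urlsafe_b64decode(encoded.encode())
    except ValueError:  # malformed token or invalid base64
        return None
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected.encode(), tag.encode()):
        return None  # signature mismatch: wrong key or tampered payload
    claims = json.loads(payload)
    if now >= claims["exp"]:
        return None  # expired
    return claims
```

Passing `now` explicitly keeps the sketch deterministic; a real deployment would more likely use asymmetric signatures so that verifiers never hold the signing key.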

Least Privilege & Dynamic Authorization (Reinforcing “Rule of Two” and Addressing OWASP AIVSS Defined Core Agentic AI Risk #2, #7):

Agents should be granted only the minimum permissions necessary for their current task, and those permissions should be revoked immediately upon completion.

Implement fine-grained access controls at every layer (data, tools, systems), evaluated in real-time.

The “Rule of Two” becomes a core design principle here, ensuring that even when an agent has some access, it’s not a free pass.

Action: Implement context-aware authorization policies that dynamically adjust an agent’s permissions based on task, data sensitivity, and verified intent, rather than static role assignments.
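One way to picture task-scoped, default-deny authorization is the sketch below. It covers only task, tool, and data sensitivity (not “verified intent”), and the class names, the three sensitivity levels, and the example task names are assumptions made for illustration.

```python
from dataclasses import dataclass

# Hypothetical ordering of data sensitivity levels.
SENSITIVITY = {"public": 0, "internal": 1, "secret": 2}


@dataclass(frozen=True)
class Grant:
    task: str
    tool: str
    max_sensitivity: str


@dataclass(frozen=True)
class Request:
    agent_id: str
    task: str
    tool: str
    data_sensitivity: str


class TaskScopedAuthorizer:
    """Default-deny authorizer whose grants are tied to a task, not a role."""

    def __init__(self):
        self._grants = {}  # agent_id -> set of Grant

    def grant(self, agent_id, grant):
        if grant.max_sensitivity not in SENSITIVITY:
            raise ValueError(f"unknown sensitivity: {grant.max_sensitivity}")
        self._grants.setdefault(agent_id, set()).add(grant)

    def revoke_task(self, agent_id, task):
        """Drop every grant tied to a task, e.g. when the task completes."""
        current = self._grants.get(agent_id, set())
        self._grants[agent_id] = {g for g in current if g.task != task}

    def is_allowed(self, request):
        level = SENSITIVITY.get(request.data_sensitivity)
        if level is None:
            return False  # unknown sensitivity label: deny
        for g in self._grants.get(request.agent_id, set()):
            if (
                g.task == request.task
                and g.tool == request.tool
                and level <= SENSITIVITY[g.max_sensitivity]
            ):
                return True
        return False
```

Each tool call is evaluated against the agent’s current grants, so revoking a task’s grants takes effect on the very next request.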

Secure Inter-Agent Communication & Orchestration (Addressing OWASP AIVSS Defined Core Agentic AI Risk #3, #4):

All communication channels between agents must be encrypted, integrity-protected, and authenticated.

Establish secure protocols for agent orchestration, ensuring that coordination logic is tamper-proof and shared knowledge bases are protected against poisoning.

Implement mechanisms to detect “Harmful Collaboration” and “Quorum Manipulation” by monitoring collective agent behavior for anomalies.

Action: Develop an Agent-to-Agent (A2A) protocol with built-in security for message signing, integrity checks, and secure state synchronization for shared context.
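As a rough illustration of message signing and integrity checks between agents, the sketch below signs an envelope with a per-sender HMAC key and rejects replays by nonce. It is not the wire format of any existing agent-to-agent protocol; the envelope fields and the names `sign_message` and `Receiver` are assumptions.

```python
import hashlib
import hmac
import json


def _mac(envelope, key):
    """MAC over the canonical JSON form of the signed fields."""
    signed = {k: envelope.get(k) for k in ("sender", "nonce", "body")}
    canonical = json.dumps(signed, sort_keys=True).encode()
    return hmac.new(key, canonical, hashlib.sha256).hexdigest()


def sign_message(sender, nonce, body, key):
    """Build a signed envelope; nonce must be unique per sender."""
    envelope = {"sender": sender, "nonce": nonce, "body": body}
    envelope["sig"] = _mac(envelope, key)
    return envelope


class Receiver:
    """Accepts a message only if it is authentic and not a replay."""

    def __init__(self, peer_keys):
        self._peer_keys = peer_keys  # sender id -> that sender's key
        self._seen = set()           # (sender, nonce) pairs already accepted

    def accept(self, envelope):
        key = self._peer_keys.get(envelope.get("sender"))
        if key is None:
            return False  # unknown sender
        sig = str(envelope.get("sig", ""))
        if not hmac.compare_digest(_mac(envelope, key).encode(), sig.encode()):
            return False  # body or header was altered, or wrong key
        replay_id = (envelope["sender"], envelope["nonce"])
        if replay_id in self._seen:
            return False  # replayed message
        self._seen.add(replay_id)
        return True
```

Channel encryption (for example, mutually authenticated TLS) would sit underneath this; the sketch covers only per-message authenticity and replay rejection.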

Robust Memory & Context Management (Addressing OWASP AIVSS Defined Core Agentic AI Risk #6):

Enforce strict memory isolation between users and sessions. Sensitive data should be ephemeral and encrypted at rest.

Implement “context refresh” mechanisms to prevent “Context Amnesia Exploitation” and ensure security constraints are always active.

Regularly purge residual memory and implement strong data retention policies compliant with privacy regulations.

Action: Design memory architectures with granular access controls and time-to-live (TTL) settings for sensitive context, preventing unintended leakage or long-term manipulation.
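The isolation and TTL ideas above can be sketched with an in-memory store keyed by user and session. This is an illustrative toy, assuming a hypothetical `ScopedMemory` class; it omits encryption at rest and persistence.

```python
class ScopedMemory:
    """Context store isolated per (user, session), with expiry on every entry."""

    def __init__(self, ttl_s):
        self._ttl_s = ttl_s
        self._items = {}  # (user_id, session_id, key) -> (value, expires_at)

    def put(self, user_id, session_id, key, value, now):
        self._items[(user_id, session_id, key)] = (value, now + self._ttl_s)

    def get(self, user_id, session_id, key, now):
        """Return the value only for the exact owner scope and before expiry."""
        scope = (user_id, session_id, key)
        entry = self._items.get(scope)
        if entry is None:
            return None
        value, expires_at = entry
        if now >= expires_at:
            del self._items[scope]  # drop residual data as soon as it is stale
            return None
        return value

    def purge_expired(self, now):
        """Remove all expired entries and return how many were removed."""
        expired = [k for k, (_, exp) in self._items.items() if now >= exp]
        for k in expired:
            del self._items[k]
        return len(expired)
```

Because the user and session IDs are part of the lookup key, a read from a different user or session simply finds nothing, rather than relying on a later filtering step.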

Comprehensive Observability & Untraceability Mitigation (Addressing OWASP AIVSS Defined Core Agentic AI Risk #9):

Mandate immutable logging of all agent actions, tool invocations, decisions, and data access, with unique correlation IDs across systems.

Real-time monitoring and alerting for anomalous agent behavior, unauthorized actions, or “Log Tampering.”

Implement “Chain-of-Action” tracking to attribute every agent action back to its instructing origin and human actor, ensuring non-repudiation.

Action: Deploy an agent-focused Security Information and Event Management system (an “AI-SIEM”) that aggregates and correlates agent logs from all integrated systems to provide a complete forensic trail.
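One common way to make a log tamper-evident is to hash-chain its entries, so that changing any earlier record invalidates every later hash. The sketch below assumes a hypothetical `AuditLog` class and record fields; it illustrates the chaining idea only and is not a SIEM.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash, record):
    payload = json.dumps(record, sort_keys=True).encode()
    return hashlib.sha256(prev_hash.encode() + payload).hexdigest()


class AuditLog:
    """Append-only log in which each entry commits to the one before it."""

    def __init__(self):
        self.entries = []

    def append(self, correlation_id, agent_id, action, instructed_by):
        record = {
            "correlation_id": correlation_id,
            "agent_id": agent_id,
            "action": action,
            "instructed_by": instructed_by,  # chain-of-action origin
        }
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        self.entries.append(
            {"record": record, "prev": prev, "hash": _digest(prev, record)}
        )

    def verify(self):
        """Recompute the chain; False if any record or link was altered."""
        prev = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev:
                return False
            if entry["hash"] != _digest(prev, entry["record"]):
                return False
            prev = entry["hash"]
        return True
```

A chain like this detects edits and mid-log deletions, but not truncation of the most recent entries, so the latest hash would also need to be anchored somewhere the agent cannot write.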

Human Oversight & Verification for Critical Actions (Complementing “Rule of Two” and Addressing OWASP AIVSS Defined Core Agentic AI Risk #7, #10):

For interactions with critical systems or actions with high-impact consequences, a human-in-the-loop approval mechanism is non-negotiable.

Design clear “pause” or “rollback” functionalities for agents operating in sensitive environments.

Implement robust semantic analysis and intent verification mechanisms to detect “Semantic Ambiguity Exploitation” and subtle “Goal Manipulation” tactics before execution.

Action: Implement “safety agents” or “oversight agents” that monitor the behavior of task-specific agents and can flag or halt actions that deviate from intended, safe goals, requiring human intervention.
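A very simple oversight agent might look like the sketch below: it halts tool calls that fall outside what the declared goal permits and routes high-impact calls to a human. The `Verdict` values, the `ProposedAction` fields, and the per-goal tool allowlist are assumptions; real intent verification would need far richer semantic analysis than a lookup.

```python
from dataclasses import dataclass
from enum import Enum


class Verdict(Enum):
    ALLOW = "allow"
    NEEDS_HUMAN = "needs_human"
    HALT = "halt"


@dataclass(frozen=True)
class ProposedAction:
    goal: str          # the goal the task agent was instructed to pursue
    tool: str          # the tool it now wants to invoke
    high_impact: bool  # e.g. touches a critical system or moves money


class OversightAgent:
    """Reviews each proposed action before the task agent may execute it."""

    def __init__(self, allowed_tools_by_goal):
        self._allowed = allowed_tools_by_goal  # goal -> set of tool names

    def review(self, action):
        if action.tool not in self._allowed.get(action.goal, set()):
            return Verdict.HALT  # deviates from the intended goal
        if action.high_impact:
            return Verdict.NEEDS_HUMAN  # in scope, but requires approval
        return Verdict.ALLOW
```

The ordering matters in this sketch: an out-of-scope action is halted outright rather than being offered to a human for approval.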

Supply Chain Security for All AI Components (Addressing OWASP AIVSS Defined Core Agentic AI Risk #8):

Scrutinize the provenance and integrity of all pre-trained models, datasets, libraries, and external tools used by agents.

Implement automated scanning for vulnerabilities in agent dependencies and enforce secure development practices throughout the agent’s lifecycle.

Action: Establish a “Software Bill of Materials (SBOM)” for every agent, detailing all its components, dependencies, and their security posture, and integrate this into CI/CD pipelines.
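As a sketch of how an SBOM could gate a pipeline step, the function below compares deployed artifacts against pinned SHA-256 hashes. The flat list-of-dicts manifest is a simplification invented for illustration, not a standard SBOM format, and `find_violations` is a hypothetical name.

```python
import hashlib


def sha256_hex(data):
    return hashlib.sha256(data).hexdigest()


def find_violations(sbom, artifacts):
    """Compare artifacts (name -> bytes) against SBOM entries with pinned hashes.

    Each SBOM entry is assumed to be a dict with "name" and "sha256" keys.
    Returns a list of human-readable problems; an empty list means a match.
    """
    problems = []
    for component in sbom:
        name = component["name"]
        blob = artifacts.get(name)
        if blob is None:
            problems.append(f"{name}: listed in SBOM but missing")
        elif sha256_hex(blob) != component["sha256"]:
            problems.append(f"{name}: hash mismatch")
    listed = {component["name"] for component in sbom}
    for name in sorted(set(artifacts) - listed):
        problems.append(f"{name}: present but not listed in SBOM")
    return problems
```

A CI/CD job could fail the build whenever the returned list is non-empty, which catches both swapped components and dependencies that were never declared.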

The Road Ahead?

The “Agents Rule of Two” is a vital and pragmatic step in safeguarding agentic AI against prompt injection. Meta deserves credit for proposing a concrete framework for this prevalent vulnerability. However, as the OWASP AIVSS defined core agentic AI risks clearly demonstrate, the security of agentic systems is a multifaceted challenge.

By adopting a comprehensive Zero-Trust Agentic AI approach, we can build a more resilient and trustworthy ecosystem. This involves not just limiting an agent’s capabilities in a session, but fundamentally redesigning how agents are identified, how they communicate, how they manage state, and how their actions are audited across their entire lifecycle and interconnected environments. Only by addressing the full spectrum of OWASP AIVSS defined core agentic AI risks can we truly unlock the transformative potential of agentic AI safely and responsibly.

The framework diagram captures a critical insight in AI security: point solutions cannot address systemic risks. While Meta’s “Rule of Two” effectively constrains individual agent capabilities to prevent prompt injection attacks, the emerging threat landscape of agentic AI demands architectural thinking, not just tactical controls.

The visual metaphor is deliberate: a central shield representing Zero-Trust principles, with the Rule of Two positioned as just one layer within. This reflects a fundamental truth: modern AI security requires defense-in-depth. Six distinct security pillars surround the core, each addressing gaps that the Rule of Two cannot fill alone. These pillars represent the evolution from “constraining what an agent can do” to “securing how agents exist, communicate, remember, and act across their entire lifecycle.”

The key insight is architectural: agentic AI risks are interconnected, not isolated. An agent’s identity (how we know who it is), its orchestration (how it coordinates with others), its memory (what it retains across sessions), and its observability (whether we can audit its actions) are all distinct security domains. A compromise in any one area can cascade across the system, regardless of individual agent constraints.

The diagram also reveals a strategic tension in AI security: automation versus control. The Rule of Two limits automation to reduce risk. But the six pillars show we can achieve both. Through cryptographic identity, dynamic authorization, secure protocols, memory isolation, comprehensive logging, and human oversight, we can enable powerful agentic capabilities while maintaining security boundaries.

Ultimately, this framework represents a shift from reactive protection to proactive trust architecture, moving beyond “what could go wrong with this agent” to “how do we build trustworthy agent ecosystems from the ground up.”

If you have time, please also watch my keynote speech at RSA 2025 about the same topic.