Frontier AI Models Technical Deep Dive: GPT-5.1, Grok 4.1, Claude Opus 4.5, and Gemini 3 Pro

Executive Summary

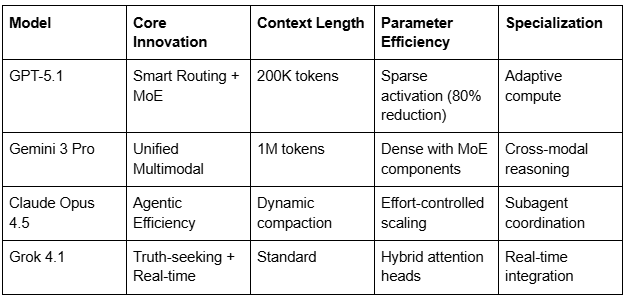

The 2025 AI landscape is dominated by four frontier models that represent distinct approaches to scaling transformer architectures: OpenAI’s GPT-5.1 with its smart routing system, Google’s Gemini 3 Pro featuring unified multimodal processing, Anthropic’s Claude Opus 4.5 with agentic efficiency controls, and xAI’s Grok 4.1 emphasizing truth-seeking capabilities. Each model introduces novel architectural innovations while maintaining the fundamental transformer backbone.

GPT-5.1: Smart Routing and Adaptive Test-Time Compute

Core Architecture Innovations

GPT-5.1 represents a paradigm shift from monolithic transformer scaling to intelligent compute allocation. The model features a unified system architecture with three key components:

Smart Router Network: A continuously trained gating mechanism that dynamically selects between instant-response mode and deeper thinking mode based on query complexity, user intent, and historical performance signals. The router achieves a median first-token latency of ~230 ms while maintaining quality thresholds (source: Skywork AI).

Sparse Mixture-of-Experts (MoE): The model employs 28 actively selected experts from a larger pool, processing each token through only the most relevant sub-networks. This sparse activation reduces computational overhead by approximately 65% compared to dense models while improving reasoning accuracy from 79% to 87% on mathematical tasks.

Parallel Test-Time Compute: The GPT-5.1 Pro variant scales compute at inference time by launching multiple reasoning shards simultaneously and aggregating their results. This approach achieves a perfect score (100%) on the AIME 2025 mathematics competition while maintaining reasonable latency.
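The parallel test-time compute idea can be illustrated with a minimal sketch. It assumes that "aggregating results" means a simple majority vote over independent attempts, which the source does not confirm; `reasoning_shard` is a hypothetical stand-in for a model call, and nothing here reflects OpenAI's actual implementation.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
import random


def reasoning_shard(question: str, seed: int) -> str:
    """Hypothetical stand-in for one independent reasoning attempt.

    A real shard would call a model; this toy version answers "42"
    most of the time and a random digit otherwise.
    """
    rng = random.Random(seed)
    return "42" if rng.random() < 0.7 else str(rng.randint(0, 9))


def majority_vote(answers: list[str]) -> tuple[str, float]:
    """Return the most common answer and the fraction of shards that agree."""
    winner, votes = Counter(answers).most_common(1)[0]
    return winner, votes / len(answers)


def parallel_answer(question: str, n_shards: int = 8) -> tuple[str, float]:
    """Run n_shards attempts concurrently, then aggregate by majority vote."""
    with ThreadPoolExecutor(max_workers=n_shards) as pool:
        # Each shard gets its own seed so the attempts differ.
        answers = list(pool.map(lambda s: reasoning_shard(question, s),
                                range(n_shards)))
    return majority_vote(answers)


answer, agreement = parallel_answer("toy question")
print(answer, agreement)
```

The agreement fraction doubles as a rough confidence signal: a low value suggests the shards disagree and more compute, or a fallback, may be warranted.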

Technical Specifications

Context Window: 200K tokens with intelligent caching and retrieval utilities

Throughput: 95-120 tokens/second on typical prose, 70-90 tokens/second on heavy reasoning

Parameter Efficiency: Sparse activation reduces effective parameters per token by ~80%

Latency Optimization: First-token latency improved by 20% over dense GPT-5 variants
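The sparse activation behind these figures can be sketched as top-k expert gating. This is a generic illustration of the technique, not GPT-5.1's router: the toy pool has 8 scalar experts with 2 active per input (the article cites 28 active experts from an unspecified larger pool), and every name below is hypothetical.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_gate(router_logits, k):
    """Return {expert_index: weight} for the k highest-scoring experts.

    Weights are a softmax over the selected logits only, so they sum to 1.
    """
    top = sorted(range(len(router_logits)),
                 key=lambda i: router_logits[i], reverse=True)[:k]
    weights = softmax([router_logits[i] for i in top])
    return dict(zip(top, weights))


def moe_layer(x, experts, router_logits, k):
    """Evaluate only the selected experts and mix their outputs."""
    gate = top_k_gate(router_logits, k)
    return sum(w * experts[i](x) for i, w in gate.items())


# Toy pool: expert i multiplies its input by i.
experts = [lambda x, i=i: i * x for i in range(8)]
logits = [0.1, 2.0, -1.0, 0.5, 2.0, 0.0, -0.3, 1.2]

gate = top_k_gate(logits, k=2)
print(sorted(gate))                          # indices of the active experts
print(moe_layer(1.0, experts, logits, k=2))  # weighted mix of two experts
```

Only k of the experts are evaluated per input, which is where the per-token compute savings of a sparse model come from; the remaining experts cost nothing for that token.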

Performance Benchmarks

The model’s sophisticated routing mechanism enables state-of-the-art performance across diverse tasks:

AIME 2025: 94.6% (100% with GPT-5.1 Pro)

SWE-bench Verified: 74.9%

GPQA (Science): 88.4%

HealthBench Hard: 46.2%

Hallucination Reduction: 45-80% fewer hallucinations than GPT-4

Gemini 3 Pro: Unified Multimodal Transformer Stack

Architectural Foundation

Gemini 3 Pro introduces a truly unified multimodal architecture that processes text, images, audio, video, and code through a single transformer stack. This represents a departure from previous approaches that used separate encoders for different modalities.

Key Technical Innovations

Native Multimodal Processing: Unlike models that concatenate modality-specific embeddings, Gemini 3 Pro processes all input types through shared attention layers, enabling genuine cross-modal reasoning. The architecture employs specialized vision transformers (ViT) that compress visual information into the unified attention space (source: Luna Base).

Sparse MoE with 1M Token Context: The model maintains industry-leading context length through advanced positional encoding and memory management techniques. The 1M token window enables processing of entire codebases, long documents, or hours of video content seamlessly.

Deep Think Reasoning Mode: An enhanced inference mechanism that trades latency for depth, achieving 93.8% on GPQA Diamond (compared to 91.9% base performance). This mode utilizes extended chain-of-thought processing with self-verification steps.
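The latency-for-depth trade-off with self-verification can be illustrated with a generic propose-and-verify loop. This is a sketch under stated assumptions, not Google's method: `propose_candidates` stands in for sampled reasoning chains, `check` stands in for a verification step, and `max_checks` is a hypothetical budget parameter.

```python
from typing import Callable, Iterable, Optional


def solve_with_verification(
    propose: Callable[[], Iterable[int]],
    verify: Callable[[int], bool],
    max_checks: int = 100,
) -> Optional[int]:
    """Return the first proposed candidate that passes verification.

    max_checks bounds the extra compute spent on checking candidates,
    which is the latency side of the trade-off.
    """
    for checks, candidate in enumerate(propose(), start=1):
        if checks > max_checks:
            break
        if verify(candidate):
            return candidate
    return None


# Toy task: find an integer x with x**2 - 5*x + 6 == 0.
def propose_candidates():
    # Stand-in for sampled reasoning chains; some proposals are wrong.
    return [1, 4, 3, 2]


def check(x: int) -> bool:
    # Verification by substitution into the original equation.
    return x * x - 5 * x + 6 == 0


print(solve_with_verification(propose_candidates, check))
print(solve_with_verification(propose_candidates, check, max_checks=2))
```

With a generous budget the loop rejects the two wrong proposals and returns a verified root; with a budget of two checks it gives up and returns `None`, showing how a tighter latency budget costs accuracy.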

Multimodal Integration Details

The unified architecture processes different modalities through:

Text: Standard tokenization with enhanced positional encoding

Vision: Vision Transformer (ViT) patches compressed into token space

Audio: Spectrogram-based representation converted to token embeddings

Video: Temporal sequence processing with spatio-temporal attention

Code: Specialized attention heads for syntax and semantic understanding
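The vision entry above mentions ViT patches compressed into token space. A minimal sketch of the patch-splitting step follows, assuming a single-channel image stored as a list of rows; the linear projection that would turn each flattened patch into an embedding is omitted, and none of this reflects Gemini's actual preprocessing.

```python
def patchify(image, patch):
    """Split an H x W image (list of rows) into flattened patch x patch tiles.

    Tiles are returned in row-major order; in a ViT-style encoder each
    tile would then be linearly projected into one token embedding.
    """
    height, width = len(image), len(image[0])
    if height % patch or width % patch:
        raise ValueError("image dimensions must be divisible by the patch size")
    tiles = []
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            tiles.append([image[r][c]
                          for r in range(top, top + patch)
                          for c in range(left, left + patch)])
    return tiles


# 4 x 6 toy "image" whose pixel value encodes its (row, column) position.
image = [[10 * r + c for c in range(6)] for r in range(4)]
tokens = patchify(image, patch=2)
print(len(tokens))  # number of visual tokens: (4 / 2) * (6 / 2)
print(tokens[0])    # pixels of the top-left 2 x 2 patch
```

The token count grows with image area divided by patch area, which is why patch size directly controls how much of the context window an image consumes.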

Performance Metrics

Gemini 3 Pro demonstrates PhD-level capabilities across diverse benchmarks:

GPQA Diamond: 91.9% (93.8% with Deep Think)

ARC-AGI-2: 31.1% (45.1% with Deep Think)

MathArena Apex: 23.4%

Video-MMMU: 87.6%

Vending-Bench 2: $5,478 mean net worth

Claude Opus 4.5: Agentic Efficiency and Subagent Coordination

Architectural Philosophy

Claude Opus 4.5 represents Anthropic’s focus on agentic efficiency rather than raw scale. The model introduces several innovations aimed at making AI agents more practical for real-world deployment:

Technical Innovations

Effort Control Mechanism: A novel API parameter that allows developers to control the computational budget per query. At medium effort, Opus 4.5 matches Sonnet 4.5 performance while using 76% fewer tokens. At high effort, it exceeds Sonnet 4.5 by 4.3 percentage points while using 48% fewer tokens (source: Anthropic).

Context Compaction: An intelligent context management system that maintains conversation coherence over extended interactions. Rather than simply truncating context, the system compacts information while preserving semantic relationships, enabling “infinite-length” conversations.

Subagent Coordination Architecture: Opus 4.5 can orchestrate multiple specialized subagents, achieving 85.4% performance when managing Sonnet 4.5 subagents compared to 66.5% when Sonnet manages itself. This hierarchical approach enables complex multi-step workflows.
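The difference between truncating and compacting context can be shown with a small sketch. It assumes compaction means replacing older messages with a summary once a token budget is exceeded; `count_tokens` and `summarize` are hypothetical placeholders (a real system would use the model's tokenizer and a model-written summary), and this is not Anthropic's implementation.

```python
def count_tokens(text: str) -> int:
    # Crude whitespace proxy; a real system would use the model tokenizer.
    return len(text.split())


def summarize(messages: list[str]) -> str:
    # Hypothetical placeholder: a real system would ask a model to summarize.
    return "[summary of %d earlier messages]" % len(messages)


def compact(history: list[str], budget: int, keep_recent: int = 2) -> list[str]:
    """Replace older messages with one summary when history exceeds budget.

    The most recent keep_recent messages are kept verbatim so the
    immediate conversational thread is preserved.
    """
    total = sum(count_tokens(m) for m in history)
    if total <= budget or len(history) <= keep_recent:
        return history
    split = len(history) - keep_recent
    older, recent = history[:split], history[split:]
    return [summarize(older)] + recent


history = ["word " * 10 for _ in range(5)]  # five 10-token messages
compacted = compact(history, budget=30)
print(len(compacted), compacted[0])
```

Unlike plain truncation, the older turns still leave a trace in the prompt, so later steps can refer back to decisions made early in the session.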

Agentic Capabilities

The model demonstrates remarkable efficiency in long-horizon tasks:

Autonomous Coding: Can work independently for 20-30 minutes on complex coding tasks

Token Efficiency: 65% reduction in token usage for long-horizon coding compared to predecessors

Multi-agent Orchestration: Coordinates specialized agents for complex workflows

Context Management: Intelligent compaction enables extended task execution
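Multi-agent orchestration follows a dispatch-and-collect pattern that can be sketched briefly. The roles and subagent functions below are hypothetical toys; in a real deployment each subagent would be a separate model call, and the orchestrating model would also write the plan and merge the results.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

Subagent = Callable[[str], str]


def orchestrate(subtasks: dict[str, str],
                subagents: dict[str, Subagent]) -> dict[str, str]:
    """Send each role's subtask to the matching subagent and collect results."""
    missing = set(subtasks) - set(subagents)
    if missing:
        raise KeyError(f"no subagent for roles: {sorted(missing)}")
    with ThreadPoolExecutor() as pool:
        # Independent subtasks run concurrently; results are keyed by role.
        futures = {role: pool.submit(subagents[role], text)
                   for role, text in subtasks.items()}
        return {role: future.result() for role, future in futures.items()}


# Toy subagents standing in for specialized model instances.
subagents = {
    "research": lambda q: f"notes on {q}",
    "coding": lambda spec: f"patch for {spec}",
}
plan = {"research": "rate limiting", "coding": "retry logic"}
results = orchestrate(plan, subagents)
print(results)
```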

Performance Benchmarks

Claude Opus 4.5 leads in software engineering tasks:

SWE-bench Verified: State-of-the-art performance

Terminal Bench: +15% over Sonnet 4.5

Deep Research: +15% improvement with tool use

Office Automation: Superior performance on spreadsheet and document processing

Grok 4.1: Truth-Seeking and Real-Time Integration

Technical Architecture

Grok 4.1, while less documented than its competitors, represents xAI’s approach to truth-seeking AI with several notable technical features:

Architectural Details

Hybrid Transformer Architecture: Employs specialized attention heads tuned for mathematics, science, logic, and code, building on a 1.8T-parameter base with a modular plugin architecture for domain specialization.

Real-Time Data Integration: Unique access to X (formerly Twitter) platform data enables real-time information processing, though this capability’s technical implementation remains proprietary.

Enhanced Reasoning Modes: Two distinct operational modes:

Non-reasoning (Tensor): Immediate responses, ranks #2 on the LMSYS Text Arena at 1465 Elo

Thinking (QuasarFlux): Extended reasoning, holds the #1 position at 1483 Elo
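To put the 18-point gap between the two modes in perspective, the ratings can be converted into an expected head-to-head win rate. The sketch below assumes the standard Elo logistic formula applies to these arena scores; the leaderboard's exact rating model may differ.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Standard Elo expected score of A against B (win = 1, loss = 0)."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


thinking, non_reasoning = 1483, 1465
p = expected_score(thinking, non_reasoning)
print(f"{p:.3f}")  # expected preference rate for the thinking mode
```

Under that assumption the thinking mode would be preferred in roughly 52.6% of direct comparisons, so the two ranks are separated by a small margin.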

Performance Characteristics

Grok 4.1 demonstrates competitive performance despite more limited technical disclosure:

LMSYS Text Arena: #1 position (1483 Elo) for thinking mode

Hallucination Reduction: Significant improvements in factual accuracy

Emotional Intelligence: Enhanced creative writing and conversational abilities

Long-Context Performance: Measurable improvements in multi-turn conversations

Comparative Technical Analysis

Architecture Philosophy Comparison

Training Methodology Innovations

GPT-5.1: Continuous router training using real user signals, preference rates, and correctness metrics

Gemini 3 Pro: Native multimodal training with cross-modal alignment and Deep Think reinforcement learning

Claude Opus 4.5: Constitutional AI with effort control training and subagent coordination fine-tuning

Grok 4.1: Large-scale reinforcement learning with AI evaluators as training judges

Hallucination Reduction Techniques

All models implement sophisticated hallucination mitigation:

GPT-5.1: 45-80% reduction through test-time verification and reasoning chains

Gemini 3 Pro: Cross-modal verification and self-consistency checks

Claude Opus 4.5: Constitutional AI principles and uncertainty quantification

Grok 4.1: Real-time fact-checking against external data sources

Future Implications and Technical Trajectories

The 2025 frontier models reveal several convergent trends:

Sparse Compute Allocation: All models move toward selective activation of capabilities

Multimodal Integration: Unified processing rather than modality-specific pipelines

Agentic Orchestration: Hierarchical agent systems for complex task decomposition

Real-Time Adaptation: Dynamic adjustment based on query complexity and context

Efficiency Optimization: Focus on token efficiency and computational cost management

These technical innovations represent a maturation of transformer architectures, moving beyond simple scale increases to intelligent resource allocation and specialized capability activation. The emphasis on real-world utility, agentic workflows, and multimodal reasoning suggests the field is transitioning from demonstration models to practical AI systems capable of sustained, complex task execution.

The competitive landscape indicates that architectural innovation, rather than pure parameter scaling, is becoming the primary driver of performance improvements in frontier AI models.
